The problem arose after I was exposed to other interaction models in various VR games and applications that utilise physical interactions, which I felt heightened immersion.

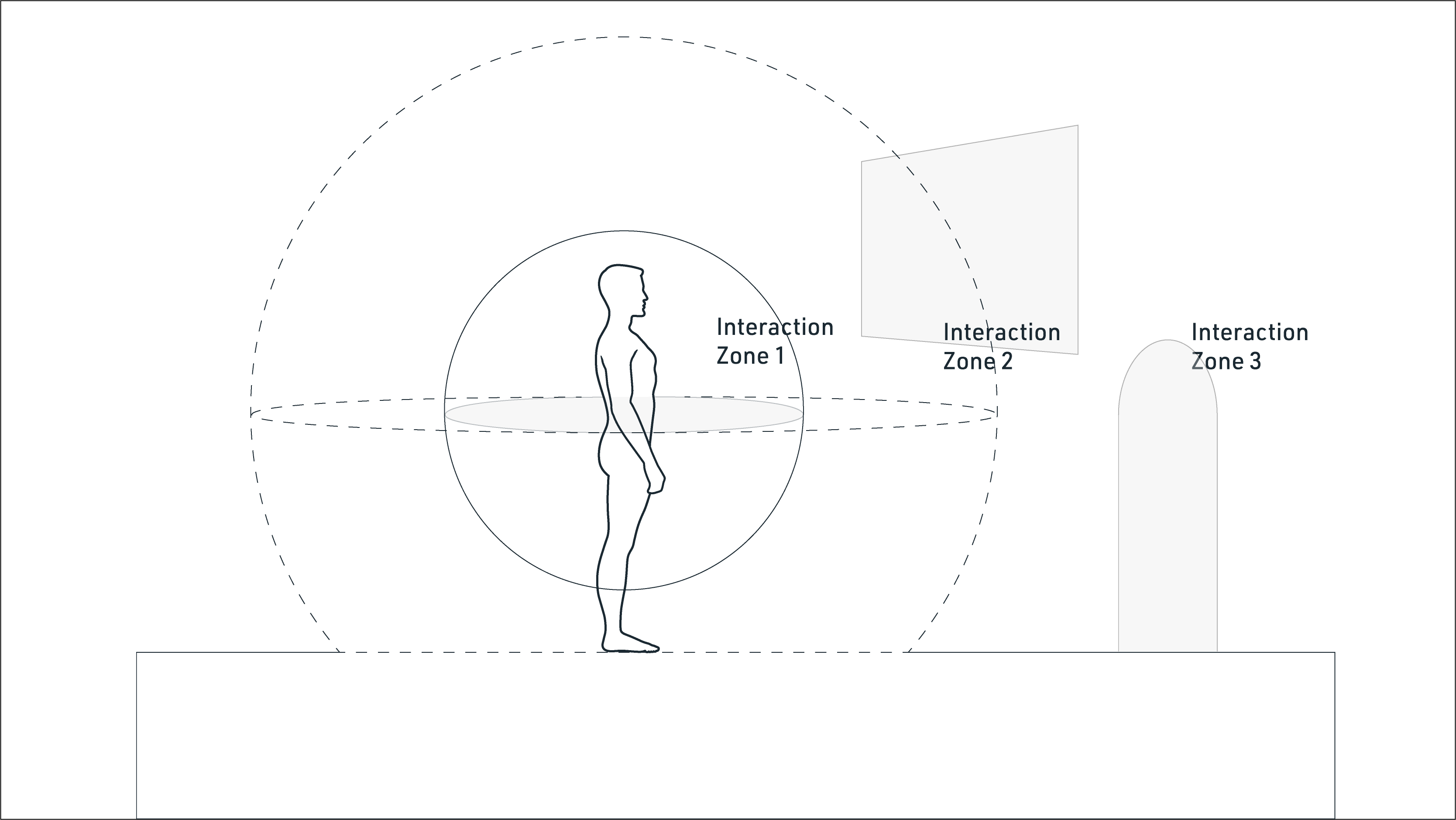

The Meta home menu adapts many of its interaction behaviours from traditional 2D "point-and-click" screen interactions. Though this is an efficient and proven model, I took this opportunity to explore how it could be augmented with other interaction models in pursuit of a balance between immersion and convenience.